Handling Message Failures in Distributed Systems

Introduction

In microservice architectures, reliable messaging between services via brokers like Kafka, RabbitMQ, or AWS SQS is vital. Yet message delivery failures inevitably occur, risking data inconsistency and lost information. This guide covers engineering strategies for managing these failures: Dead-Letter Queues (DLQs), server-side retry mechanisms, notification systems, client-side state preservation, and feature flags for controlling client-side retries.

1. Dead-Letter Queues (DLQs)

Concept

A Dead-Letter Queue holds messages that cannot be processed after several retries, allowing engineers to investigate or reprocess them separately.

Implementation (RabbitMQ Example):

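    // Assumes an amqplib channel (promise API) inside an async function.
    // Declare the DLQ and bind it to the dead-letter exchange; without the binding, dead-lettered messages are dropped.
    await channel.assertExchange('notes_dlq_exchange', 'direct');
    await channel.assertQueue('notes_queue_dlq');
    await channel.bindQueue('notes_queue_dlq', 'notes_dlq_exchange', 'notes_dlq');
    // Messages rejected from notes_queue are re-published to the dead-letter exchange.
    await channel.assertQueue('notes_queue', {
      arguments: { 'x-dead-letter-exchange': 'notes_dlq_exchange', 'x-dead-letter-routing-key': 'notes_dlq' },
    });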

Recommended Practices:

- Set automated alerts for DLQ message count thresholds.

- Regularly inspect DLQs to resolve persistent issues.
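
When inspecting a DLQ, it helps to see why and how often each message was dead-lettered. RabbitMQ records this in the `x-death` header, an array of entries with fields such as `queue`, `reason`, and `count`. The following is a minimal sketch, assuming an amqplib-style message object; `summarizeDeadLetter` is a hypothetical helper name, not part of any library.

```javascript
// Hypothetical helper: summarizes RabbitMQ's `x-death` header for one message.
// Assumes an amqplib-style message: { properties: { headers: { 'x-death': [...] } } }.
function summarizeDeadLetter(msg) {
  const headers = (msg.properties && msg.properties.headers) || {};
  const deaths = Array.isArray(headers['x-death']) ? headers['x-death'] : [];

  return {
    // Total number of times the message was dead-lettered, across all entries.
    totalDeaths: deaths.reduce((sum, d) => sum + Number(d.count || 0), 0),
    // Distinct reasons, e.g. 'rejected', 'expired', 'maxlen'.
    reasons: [...new Set(deaths.map((d) => d.reason))],
    // Queues the message was dead-lettered from.
    queues: [...new Set(deaths.map((d) => d.queue))],
  };
}

// Example with a hand-written message; real headers come from the broker.
const example = {
  properties: {
    headers: {
      'x-death': [{ queue: 'notes_queue', reason: 'rejected', count: 3 }],
    },
  },
};
console.log(summarizeDeadLetter(example));
```

A summary like this can be logged for each message pulled from `notes_queue_dlq` to separate one-off failures from messages that fail repeatedly.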

2. Retry Mechanisms (Server-Side)

Exponential Backoff Strategy

Retrying with exponential backoff handles transient errors, while the growing delay between attempts reduces pressure on dependent services.

Implementation Example (Node.js):

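    async function processMessageWithRetry(msg, retries = 5) {
      for (let attempt = 1; attempt <= retries; attempt++) {
        try {
          await processMessage(msg);
          return;
        } catch (error) {
          console.warn(`Attempt ${attempt} of ${retries} failed: ${error.message}`);
          if (attempt === retries) break; // no point waiting after the final attempt
          // Exponential backoff: 2s, 4s, 8s, ...
          const delay = 2 ** attempt * 1000;
          await new Promise((resolve) => setTimeout(resolve, delay));
        }
      }
      // All attempts failed: hand the message over to the DLQ.
      console.error(`Giving up after ${retries} attempts; moving message to DLQ.`);
      await moveToDeadLetterQueue(msg);
    }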

Recommended Practices:

- Cap maximum retries to prevent cascading failures.

- Clearly log all retry attempts and final outcomes.
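
The delay in the example above grows without bound and is identical for every consumer, so many consumers that fail at the same moment will also retry at the same moment. A common refinement is to cap the delay and add random jitter. The sketch below shows one way to do that; `backoffDelay`, `baseMs`, `maxMs`, and the injectable `random` parameter are illustrative names, not part of the original example.

```javascript
// Illustrative helper: exponential backoff with an upper bound and "full jitter".
// `random` is injectable so the function can be tested deterministically.
function backoffDelay(attempt, { baseMs = 1000, maxMs = 30000, random = Math.random } = {}) {
  // Exponential growth: baseMs * 2^attempt, capped at maxMs.
  const ceiling = Math.min(maxMs, baseMs * 2 ** attempt);
  // Full jitter: pick a delay uniformly in [0, ceiling).
  return Math.floor(random() * ceiling);
}

// Upper bounds for attempts 1..6 with the defaults: 2000, 4000, 8000, 16000, 30000, 30000.
for (let attempt = 1; attempt <= 6; attempt++) {
  console.log(attempt, backoffDelay(attempt));
}
```

Inside a retry loop, `backoffDelay(attempt)` could replace the fixed `2 ** attempt * 1000` calculation. The trade-off is that individual waits become less predictable in exchange for spreading retries out over time.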

3. Notification and Monitoring Systems

Alerting on Message Failures

Real-time alerts ensure quick remediation of message-related issues.

Prometheus Alert Rule Example:

    - alert: DLQSizeCritical
      expr: rabbitmq_queue_messages{queue='notes_queue_dlq'} > 100
      for: 5m
      labels:
        severity: critical
      annotations:
        summary: 'Critical DLQ size detected.'

Notification Channels:

- Slack, PagerDuty, Email
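
The `for: 5m` clause in the rule above means the alert fires only once the condition has held for five minutes, which filters out brief spikes. The sketch below illustrates that idea over a list of timestamped samples. It is a simplified model for intuition, not how Prometheus itself evaluates rules; `shouldFire` and the sample shape are assumptions.

```javascript
// Simplified model of a "threshold held for a duration" alert condition.
// `samples` is assumed to be sorted by time: [{ t: epochMs, value: number }, ...].
function shouldFire(samples, threshold, forMs) {
  if (samples.length === 0) return false;
  const latest = samples[samples.length - 1];
  if (latest.value <= threshold) return false;

  // Walk backwards to find when the current breach started.
  let breachStart = latest.t;
  for (let i = samples.length - 2; i >= 0 && samples[i].value > threshold; i--) {
    breachStart = samples[i].t;
  }
  return latest.t - breachStart >= forMs;
}

const minute = 60 * 1000;
const samples = [
  { t: 0 * minute, value: 20 },
  { t: 1 * minute, value: 150 },
  { t: 3 * minute, value: 180 },
  { t: 6 * minute, value: 210 },
];
// The breach began at minute 1 and has lasted 5 minutes.
console.log(shouldFire(samples, 100, 5 * minute)); // true
```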

4. Client-Side State Management

Clients that depend on reliable message delivery should keep unsent data in local state so that nothing is lost during message broker downtime or server restarts.

Local State Preservation Example:

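    function saveNoteLocally(note) {
      const pendingNotes = JSON.parse(localStorage.getItem('pendingNotes') || '[]');
      pendingNotes.push(note);
      localStorage.setItem('pendingNotes', JSON.stringify(pendingNotes));
    }

    // Drops a note from the pending list once the server has accepted it.
    function removeNoteFromLocalStorage(noteId) {
      const pendingNotes = JSON.parse(localStorage.getItem('pendingNotes') || '[]');
      localStorage.setItem('pendingNotes', JSON.stringify(pendingNotes.filter((n) => n.id !== noteId)));
    }

    async function resendPendingNotes() {
      const pendingNotes = JSON.parse(localStorage.getItem('pendingNotes') || '[]');
      for (const note of pendingNotes) {
        try {
          await sendNoteToServer(note);
          removeNoteFromLocalStorage(note.id);
        } catch (e) {
          // Leave the note in storage; the next run retries it.
          console.warn(`Resend failed for note ${note.id}:`, e);
        }
      }
    }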

Best Practices:

- Regularly retry sending stored messages.

- Clearly mark and update the state of notes locally.
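
One way to clearly mark note state is to store a `status` field alongside each pending note and update it as the note moves through the sync cycle. The sketch below keeps the logic in pure functions so it can be used with `localStorage` or any other store; the status names (`pending`, `failed`, `synced`) and the `attempts` counter are assumptions for illustration.

```javascript
// Illustrative status values for a locally stored note (assumed, not from a library).
const NoteStatus = Object.freeze({ PENDING: 'pending', FAILED: 'failed', SYNCED: 'synced' });

// Returns a new list in which the note with `id` has the given status.
// Failed sends also increment an `attempts` counter for later inspection.
function markNote(notes, id, status) {
  return notes.map((note) => {
    if (note.id !== id) return note;
    const attempts = (note.attempts || 0) + (status === NoteStatus.FAILED ? 1 : 0);
    return { ...note, status, attempts };
  });
}

// Notes that still need to be sent: everything not yet acknowledged by the server.
function notesToResend(notes) {
  return notes.filter((note) => note.status !== NoteStatus.SYNCED);
}

let notes = [
  { id: 'n1', text: 'Lecture 1', status: NoteStatus.PENDING },
  { id: 'n2', text: 'Lecture 2', status: NoteStatus.PENDING },
];
notes = markNote(notes, 'n1', NoteStatus.SYNCED);
notes = markNote(notes, 'n2', NoteStatus.FAILED);
console.log(notesToResend(notes).map((n) => n.id)); // [ 'n2' ]
```

Keeping synced notes in the list with an explicit status, rather than deleting them immediately, makes it easier to show sync state in the UI; they can be pruned later.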

5. Feature Flags for Client-Side Retries

Feature flags enable controlled rollout and management of client-side retry logic, which is especially useful during server restarts or maintenance windows.

Example Feature Flag Configuration:

    {
      "features": {
        "retry_pending_notes": true,
        "retry_interval_seconds": 120
      }
    }

Client-side Usage Example:

    if (featureFlags.retry_pending_notes) {
      setInterval(resendPendingNotes, featureFlags.retry_interval_seconds * 1000);
    }
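
Because flag values often come from a remote configuration source, it is worth validating them before passing them to `setInterval`: a missing or zero interval would otherwise make the timer fire almost continuously. The following is a minimal sketch, assuming the flag shape shown above; `resolveRetryIntervalMs` and the `minSeconds` floor are hypothetical.

```javascript
// Hypothetical helper: returns the retry interval in milliseconds,
// or null when retries are disabled or the configuration is unusable.
function resolveRetryIntervalMs(flags, { minSeconds = 10 } = {}) {
  if (!flags || flags.retry_pending_notes !== true) return null;

  const seconds = Number(flags.retry_interval_seconds);
  if (!Number.isFinite(seconds) || seconds <= 0) return null;

  // Enforce a floor so a misconfigured flag cannot flood the server.
  return Math.max(seconds, minSeconds) * 1000;
}

const intervalMs = resolveRetryIntervalMs({
  retry_pending_notes: true,
  retry_interval_seconds: 120,
});
console.log(intervalMs); // 120000
```

The result can then guard the timer, for example `if (intervalMs !== null) setInterval(resendPendingNotes, intervalMs);`.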

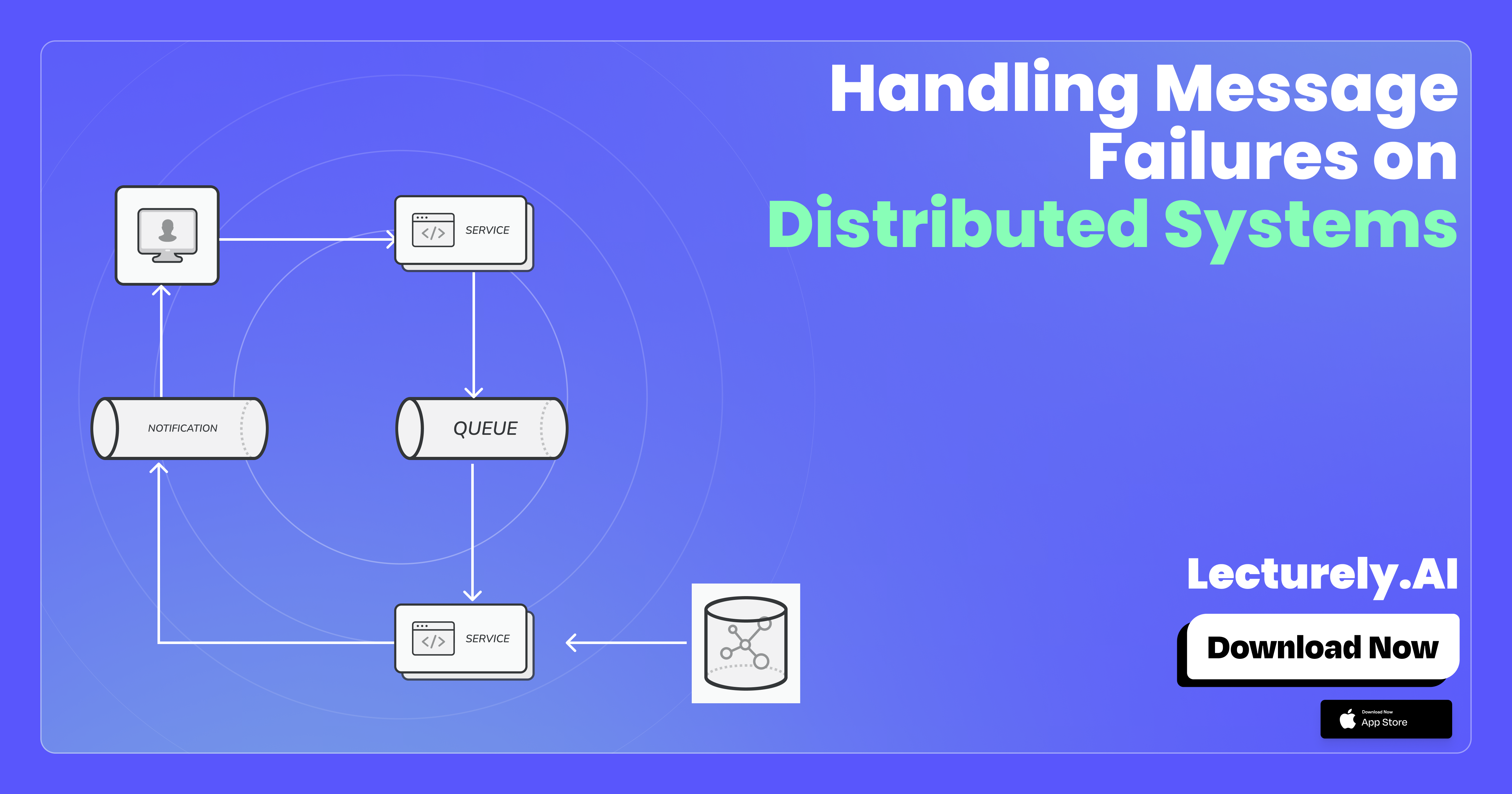

System Diagram

    [Client (Saves State)] --> [Feature Flag Check]
    |
    v
    [Broker Queue] --> [Consumer (Retries Processing)] --> [DLQ (Persistent Failures)]
    |
    v
    [Monitoring & Alerts] --> [Notification System] --> [Engineering Team Intervention]

Best Practices Summary Checklist:

- ✅ Implement and monitor Dead-Letter Queues.

- ✅ Apply exponential backoff in retry mechanisms.

- ✅ Set up alerting systems for fast incident response.

- ✅ Preserve important application state on the client side.

- ✅ Utilize feature flags for client-side retry handling.

How Lecturely Uses These Engineering Practices

Lecturely, an AI-powered note-taking application, leverages these exact engineering strategies to ensure your notes are always safe and synchronized:

- Robust DLQ implementations that handle synchronization issues.

- Smart retry mechanisms that prevent loss during temporary outages.

- Real-time failure notifications that allow rapid response.

- Local client-state management that ensures offline reliability.

- Feature-flagged retries that manage sync retries after server maintenance.

Never lose your valuable notes again. Download Lecturely today to experience secure, intelligent, and reliable note-taking powered by AI.

Thank You!