Ins and Outs of a True Microservice Architecture


Background and Evolution

Microservices architecture emerged from the need to overcome the limits of monolithic designs. Traditional applications integrated business logic, data access, and user interfaces into a single deployable unit, which complicated scalability and maintenance. By breaking applications into independently deployable services with well-defined APIs, modern architectures enable continuous improvement and targeted scalability. This evolution has been accelerated by RESTful APIs, container orchestration, and cloud technologies.

Common Misconceptions and Pitfalls

A frequent misconception is that adopting microservices automatically secures all the benefits of distributed systems. In practice, a poorly implemented microservices architecture may still rely on shared resources, for example a single database, which prevents true isolation between services. This setup, sometimes called a pseudo microservices approach, can lead to issues such as data contention and increased inter-service dependencies. Another pitfall involves underestimating the complexity of service management. The distributed nature of microservices requires robust strategies for inter-service communication, logging, and monitoring. Overlooking these aspects can result in performance bottlenecks and difficulties in troubleshooting.

Pseudo Microservices vs. True Microservices

The distinction between pseudo and genuine microservices centers on data management and service boundaries. In pseudo microservices, despite modular service code, the sharing of a single database undermines decoupling, potentially leading to transaction conflicts and data integrity issues. In contrast, true microservices ideally maintain independent data storage, ensuring that each service can evolve without unintended side effects on others.

Architectural Overview

In a properly implemented microservices ecosystem, each service is packaged along with its runtime environment, including independent storage. Services interact via lightweight protocols and may coordinate complex business transactions using patterns like Saga. This enables teams to update and deploy services autonomously while scaling resources selectively based on demand. This introductory discussion sets the stage for deeper exploration of microservices, the nuances of distributed transaction management, and the practical challenges of implementing true microservices architectures.

Definition and Core Principles

Microservices architecture structures applications as collections of loosely coupled, independently deployable services. Each service targets a specific business capability and operates within its own bounded context. This isolated operation supports independent scaling, targeted fault isolation, and focused domain modeling. Key principles include:

- Autonomy: Services manage their own runtime, deployments, and data persistence, reducing cross-service dependencies.

- Loose Coupling: Interactions occur through well-defined APIs, so internal changes in one service do not affect others as long as the API contract is preserved.

- Fault Tolerance: Techniques such as circuit breakers and fallback strategies are integrated to enhance system resilience against localized failures.

- Composability: Services can be aggregated to form comprehensive applications, enabling reusability and flexible system assembly.

- Discoverability: Utilization of service registries or API gateways allows efficient tracking and seamless integration of services.
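The fault-tolerance principle above mentions circuit breakers and fallbacks. The following is a minimal Python sketch of a circuit breaker, assuming a simple consecutive-failure threshold and a fixed reset timeout. The class name, its parameters, and the half-open behavior (one trial call once the timeout has elapsed) are illustrative choices, not the API of any particular library.

```python
import time


class CircuitBreaker:
    """Hypothetical minimal circuit breaker: opens after `failure_threshold`
    consecutive failures and short-circuits to the fallback until
    `reset_timeout` seconds have passed."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock          # injectable so tests can control time
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()   # open: fail fast without calling the service
            # timeout elapsed: half-open, let one trial call through
        try:
            result = func()
        except Exception:
            self.failures += 1
            # a failed trial call, or too many failures, (re)opens the circuit
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        self.opened_at = None       # success closes the circuit
        return result


if __name__ == "__main__":
    def inventory_lookup():
        raise ConnectionError("inventory service unavailable")  # simulated outage

    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0)
    for _ in range(3):
        # After two failures the breaker opens; the third call skips the service.
        print(breaker.call(inventory_lookup, fallback=lambda: "cached stock level"))
```

Failing fast while the circuit is open keeps a struggling downstream service from being flooded with requests, and the fallback (here a cached value) lets the caller degrade gracefully instead of failing outright.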

Design Considerations

The microservices architecture comprises several technical components:

- Service Instance: Each microservice is packaged independently, frequently within containers, which supports the use of orchestration tools like Kubernetes for scaling and management.

- Communication Patterns: Services interact using protocols such as REST or asynchronous messaging. Asynchronous messaging in particular reduces runtime dependencies, because a sender does not have to wait for the receiver to be available.

- Deployment Strategy: Continuous integration and deployment pipelines facilitate incremental updates. Because each service is deployed independently, a fix or change in one service does not require redeploying the entire system.

- Resiliency Patterns: Patterns such as Saga, for managing distributed transactions, and CQRS, for separating command and query responsibilities, help tackle the inherent complexity of distributed systems.

Many practitioners assume that migrating to a microservices architecture will inherently solve all design or scalability issues. This assumption may lead teams to adopt microservices without a thorough analysis of whether the added complexity fits their organizational needs. For example, organizations operating at modest scale often find that the overhead of distributed communication and decentralized data management outweighs the benefits of modularity.

A common error lies in equating microservices exclusively with cloud-native deployments. While microservices are often deployed in cloud environments, they are equally applicable in on-premises and hybrid configurations. Viewing them as a one-size-fits-all solution disregards the essential requirement of tailoring the architecture to specific performance, maintainability, or scalability challenges.

Deployment, Scaling, and Maintenance Challenges

Transitioning to a microservices architecture can inadvertently escalate system complexity. Distributed systems require careful coordination, especially when handling cross-service communication. For instance, the challenges of managing distributed transactions and ensuring data consistency can significantly increase both development and operational overhead. As engineering teams expand their toolsets to include container orchestration, service discovery, and distributed tracing, the cumulative complexity may even result in a system described as a 'distributed monolith' if not planned carefully.

Another key concern is access control across many autonomous services. Decentralized security policies can drift apart and raise the risk of gaps unless they are kept consistent across the system. And although technology diversity promises agility, the different stacks chosen by different teams can further complicate unified management and troubleshooting.

Data Management and the Single Database Dilemma

Decentralized data management remains a cornerstone of true microservices architectures. Relying on a single database to serve multiple services reintroduces tight coupling and limits the scalability benefits of microservices. Reported industry observations suggest that systems enforcing an independent data store for each service can reduce data contention by up to 30% while improving failure isolation. Aligning data ownership with business capabilities keeps changes and failures contained, so a service can be updated without triggering a domino effect across the application.

In summary, these misconceptions call for careful, critical evaluation. Organizations must navigate the challenges of distributed deployment, data decentralization, and continuous maintenance with well-defined strategies. The risks of unwarranted technological diversity, inadequate observability, and complex system integration demand that microservices be adopted with precise, context-driven goals and robust design patterns.

Defining Service Independence

Pseudo microservices arise when efforts to decompose a monolithic system yield components that share common resources, particularly databases. In these cases, services remain tightly coupled and require coordinated changes. This shared-data pattern undermines scalability and independent deployments, essentially reducing the architecture to a distributed monolith. A genuine microservice, by contrast, encapsulates its data store and logic. For instance, if Service A and Service B each maintain independent databases, a schema alteration in one does not necessitate parallel modifications in the other. This isolation ensures that each microservice functions autonomously, fulfilling core principles of distributed system design.
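To make the Service A / Service B example concrete, here is a minimal sketch in which two hypothetical services each own a private in-memory SQLite database, and the order service reads customer data only through the customer service's method, never through its tables. In a real deployment the services would run as separate processes and that call would go over the network (for example via REST); the class and method names are assumptions for illustration.

```python
import sqlite3


class CustomerService:
    """Hypothetical service that owns the customers table; no other service queries it."""

    def __init__(self):
        self._db = sqlite3.connect(":memory:")  # private data store
        self._db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

    def add_customer(self, name):
        cur = self._db.execute("INSERT INTO customers (name) VALUES (?)", (name,))
        self._db.commit()
        return cur.lastrowid

    def get_customer(self, customer_id):
        """Public API: returns a plain dict (the contract), never a row or table handle."""
        row = self._db.execute(
            "SELECT id, name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return None if row is None else {"id": row[0], "name": row[1]}


class OrderService:
    """Hypothetical service that owns the orders table and sees customers only via the API."""

    def __init__(self, customers):
        self._customers = customers
        self._db = sqlite3.connect(":memory:")  # separate private data store
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
        )

    def place_order(self, customer_id, total):
        # Validate through the other service's API instead of joining across databases
        if self._customers.get_customer(customer_id) is None:
            raise ValueError(f"unknown customer {customer_id}")
        cur = self._db.execute(
            "INSERT INTO orders (customer_id, total) VALUES (?, ?)", (customer_id, total)
        )
        self._db.commit()
        return cur.lastrowid
```

Because `OrderService` depends only on the shape of the dictionary returned by `get_customer`, a schema change inside `CustomerService` (say, adding an email column) requires no parallel change to `OrderService`.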

Best Practices to Ensure Genuine Service Independence

Organizations can mitigate pitfalls by adopting rigorous design and governance practices:

-

Decouple Data Stores: Each microservice should own its database. This separation not only enforces clear boundaries but also localizes potential faults to individual services.

-

Define Clear Service Contracts: Clearly delineated service interfaces and responsibilities help prevent overlap and ensure each service aligns with specific business capabilities.

-

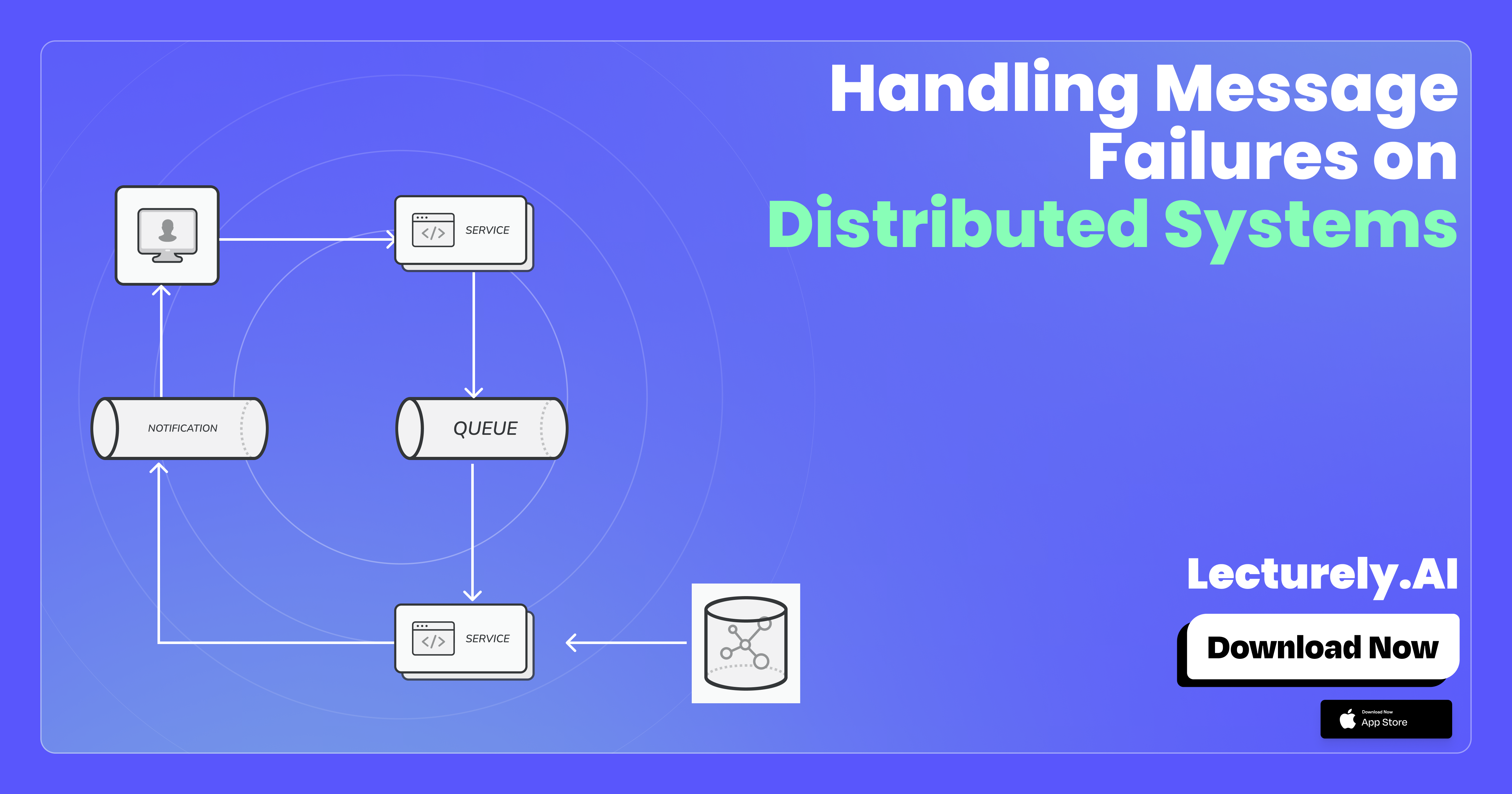

Embrace Asynchronous Communication: Leveraging message queues or event-driven architectures minimizes synchronous dependencies, thereby reducing latency and enhancing resilience.

-

Regularly Assess Coupling Metrics: Monitoring inter-service interactions and dependency coefficients allows teams to identify and rectify latent coupling issues.
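The asynchronous-communication practice can be illustrated with a toy in-memory broker. This Python sketch is only a stand-in for a real message queue, under the assumption that each subscriber gets its own queue: the publisher appends an event to every subscriber queue and returns immediately, and each consumer processes its backlog whenever it is ready. The topic names and the `drain` helper are hypothetical.

```python
from collections import defaultdict, deque


class MessageBroker:
    """Toy in-memory broker: publish() enqueues and returns immediately;
    each subscriber consumes from its own queue when it is ready."""

    def __init__(self):
        self._queues = defaultdict(list)  # topic -> list of subscriber queues

    def subscribe(self, topic):
        inbox = deque()
        self._queues[topic].append(inbox)
        return inbox

    def publish(self, topic, event):
        for inbox in self._queues[topic]:
            inbox.append(event)  # the publisher never waits for consumers


def drain(inbox, handler):
    """Handle every pending event in a subscriber's queue; return how many were handled."""
    handled = 0
    while inbox:
        handler(inbox.popleft())
        handled += 1
    return handled


if __name__ == "__main__":
    broker = MessageBroker()
    shipping_inbox = broker.subscribe("order.placed")
    email_inbox = broker.subscribe("order.placed")

    # The order service publishes and moves on without waiting for anyone.
    broker.publish("order.placed", {"order_id": 7})

    # Shipping consumes now; the email service can catch up later,
    # so a slow or offline consumer does not block the publisher.
    drain(shipping_inbox, lambda event: print("ship order", event["order_id"]))
    print("emails pending:", len(email_inbox))
```

A production broker would add durability, acknowledgements, and redelivery, but the decoupling shown here is the same: the publisher's success does not depend on any consumer being up.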

By integrating these practices into design and operational protocols, organizations can move from pseudo implementations to a more robust microservices ecosystem.

One challenge of a microservices architecture is handling failures. Because each service manages its own database independently, rolling back a transaction or guaranteeing atomicity for read and write operations that span several services is difficult. The Saga pattern addresses this problem, and it is reportedly used by large technology companies such as Netflix, eBay, and Amazon. Let us examine what the Saga pattern is and how it copes with the lack of cross-service atomicity in microservices.

Saga Architecture and Compensating Transactions

In contrast to the conventional ACID approach suitable for monolithic systems, the Saga pattern decomposes a global transaction into a series of local operations executed independently by distinct services. The pattern ensures eventual consistency by linking each operation with a corresponding compensating transaction that reverses the effect in case of failures.

Coordination Techniques

Two primary coordination methods guide saga execution: choreography and orchestration.

- Choreography: Each service autonomously publishes domain-specific events after completing its operation. These events trigger subsequent actions in other services. While this approach minimizes central dependencies, it can lead to intricate event flows that are challenging to trace as system complexity increases.

- Orchestration: A designated orchestrator directs the execution of individual service operations. This central control ensures a clear transaction flow and simplifies state management, although it may introduce a single point of failure. An orchestrator typically maintains a saga log that records each step, which is critical for executing compensating actions when necessary.
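The implementation samples later in this section use orchestration. For contrast, the following Python sketch shows choreography: no component directs the flow; each hypothetical service subscribes to the events it cares about and emits its own. The synchronous `EventBus` is a simplification of a real message broker, and the event names and shared `state` dictionary are assumptions made so the outcome is easy to inspect.

```python
from collections import defaultdict


class EventBus:
    """Synchronous in-memory event bus; a real system would use a message broker."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def emit(self, event_type, payload):
        for handler in self._handlers[event_type]:
            handler(payload)


def wire_order_saga(bus, state, payment_succeeds):
    """Each service reacts to events and emits new ones; there is no orchestrator."""

    # Order service: creates the order, confirms or cancels it later.
    def create_order(p):
        state["order"] = "CREATED"
        bus.emit("order.created", p)

    def confirm_order(p):
        state["order"] = "CONFIRMED"

    def cancel_order(p):  # compensation for create_order
        state["order"] = "CANCELLED"

    # Inventory service: reserves stock, releases it if payment fails.
    def reserve_stock(p):
        state["stock"] -= p["qty"]
        bus.emit("inventory.reserved", p)

    def release_stock(p):  # compensation for reserve_stock
        state["stock"] += p["qty"]
        bus.emit("inventory.released", p)

    # Payment service: charges the customer and announces the outcome.
    def charge(p):
        bus.emit("payment.completed" if payment_succeeds else "payment.failed", p)

    bus.on("order.requested", create_order)
    bus.on("order.created", reserve_stock)
    bus.on("inventory.reserved", charge)
    bus.on("payment.completed", confirm_order)
    bus.on("payment.failed", release_stock)
    bus.on("inventory.released", cancel_order)
```

On a payment failure the compensations ripple backwards through events (`payment.failed` triggers the stock release, whose `inventory.released` event triggers the order cancellation). This also shows why choreographed flows become hard to trace: the full sequence is visible only by reading every subscription.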

Mechanism of Compensating Transactions

In a distributed environment, failures in one service must trigger corrective measures in all preceding operations to maintain overall consistency. Each local transaction is paired with a compensating transaction, often designed to be idempotent and capable of handling partial failures. For example, in a retail order process, if a payment operation fails after an order is created and inventory reduced, compensating transactions may cancel the order and restore inventory levels.

The design of compensating transactions requires careful consideration of business logic. Factors such as non-reversible actions or partial refunds can influence how compensations are implemented. This complexity necessitates detailed tracking of each transaction step and corresponding rollback procedures.
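Idempotency matters because many message brokers offer at-least-once delivery, so a compensation may arrive twice, or arrive for a step that never completed. The sketch below uses a hypothetical inventory service that keys reservations by a saga identifier, so releasing the same reservation repeatedly has the same effect as releasing it once. The names and the in-memory dictionary are illustrative assumptions.

```python
class InventoryService:
    """Hypothetical inventory service with an idempotent compensating action."""

    def __init__(self, stock):
        self.stock = stock
        self.reservations = {}  # saga_id -> quantity currently reserved

    def reserve(self, saga_id, qty):
        if saga_id in self.reservations:
            return True          # duplicate request: already reserved, do nothing
        if qty > self.stock:
            return False         # forward step fails; nothing to compensate
        self.stock -= qty
        self.reservations[saga_id] = qty
        return True

    def release(self, saga_id):
        """Compensating transaction: safe to retry or to receive as a duplicate message."""
        qty = self.reservations.pop(saga_id, None)
        if qty is not None:      # only restore stock that this saga actually reserved
            self.stock += qty
```

Recording what each saga did, rather than blindly adding stock back, is what makes the compensation safe for both duplicates and compensations for steps that never took effect.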

Implementation Considerations

Practitioners typically implement the Saga pattern using message brokers or RESTful communication. Consider the following pseudocode example for an orchestration-based saga managing an order process:

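// Orchestration-based saga for an order. createOrder, reserveInventory,
// processPayment, confirmOrder, compensateInventory and compensateOrder are
// assumed to be calls to the respective services that return true on success.
function processOrder(orderData) {
  const transactionLog = [];

  if (!createOrder(orderData)) {
    console.error('Order creation failed');
    return false; // nothing has succeeded yet, so nothing to compensate
  }
  transactionLog.push('orderCreated');

  if (!reserveInventory(orderData)) {
    // Inventory reservation failure: undo the order
    compensateOrder(orderData);
    return false;
  }
  transactionLog.push('inventoryReserved');

  if (!processPayment(orderData)) {
    // Payment failure: undo completed steps in reverse order
    compensateInventory(orderData);
    compensateOrder(orderData);
    return false;
  }
  transactionLog.push('paymentProcessed');

  confirmOrder(orderData);
  return true;
}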

This sample illustrates the sequential execution of service operations and the immediate invocation of compensating transactions upon failure. The transaction log assists in tracking progress and supports the effective rollback of successful steps.
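The sample above hard-codes which compensations to call in each branch. An alternative, sketched here in Python under the assumption that every step can be described as an (action, compensation) pair, drives rollback directly from the log: completed steps are recorded as they succeed and undone in reverse order on failure. The class name and tuple layout are illustrative, not a specific framework's API.

```python
class SagaOrchestrator:
    """Hypothetical orchestrator that runs (name, action, compensation) steps in order,
    logs each completed step, and compensates completed steps in reverse on failure."""

    def __init__(self, steps):
        self.steps = steps  # list of (name, action, compensation) tuples
        self.log = []       # saga log of completed steps: (name, compensation)

    def run(self, ctx):
        for name, action, compensation in self.steps:
            try:
                ok = action(ctx)
            except Exception:
                ok = False   # treat an exception like a reported failure
            if not ok:
                self._rollback(ctx)
                return False
            self.log.append((name, compensation))
        return True

    def _rollback(self, ctx):
        # Undo only the steps the log says succeeded, most recent first.
        while self.log:
            _name, compensation = self.log.pop()
            compensation(ctx)


if __name__ == "__main__":
    saga = SagaOrchestrator([
        ("create_order", lambda ctx: True, lambda ctx: print("cancel order")),
        ("reserve_inventory", lambda ctx: True, lambda ctx: print("release inventory")),
        ("process_payment", lambda ctx: False, lambda ctx: print("refund payment")),
    ])
    print("succeeded:", saga.run({"order_id": 42}))
```

In this usage the payment step fails, so the inventory compensation runs before the order compensation, and the refund is never called because payment never completed. Adding a step means appending one tuple rather than adding another nested branch.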

Performance and Reliability Considerations

Saga coordination typically adds little overhead when failures are rare. As the failure rate grows, for example beyond about 5%, compensating transactions run more often and can noticeably reduce overall throughput. Designing fault-tolerant compensation strategies is therefore essential for systems handling high transaction volumes. Distributed logging and monitoring also play a vital role in diagnosing issues and ensuring rapid recovery in production environments.
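One common way to make compensations fault-tolerant is to retry them with exponential backoff, since a saga cannot reach a consistent state until its compensations succeed. The Python sketch below assumes the compensation is idempotent (so a retry after an ambiguous failure is harmless) and that, after the final attempt, the error is escalated, for example to alerting or manual intervention. The function name, defaults, and delay schedule are illustrative assumptions.

```python
import time


def run_compensation_with_retry(compensation, max_attempts=5, base_delay=0.1, sleep=time.sleep):
    """Retry a (hypothetical, idempotent) compensating action with exponential backoff.

    Returns the number of attempts used; re-raises the last error once
    max_attempts is exhausted so the failure can be escalated.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            compensation()
            return attempt
        except Exception:
            if attempt == max_attempts:
                raise  # hand off to alerting or manual intervention
            sleep(base_delay * 2 ** (attempt - 1))  # 0.1s, 0.2s, 0.4s, ...
```

Injecting `sleep` keeps the function testable and lets a caller swap in jittered delays, which help avoid many sagas retrying against a recovering service at the same instant.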

Implementation Example: Saga Coordination in Python

Below is an illustrative Python sketch of Saga orchestration in an order processing scenario:

class OrderSaga:
    """Orchestrates an order saga: reserve inventory, process payment, dispatch."""

    def execute_order(self, order):
        # `order` is assumed to expose `id` and `payment_info` attributes
        try:
            if not self.reserve_inventory(order.id):
                self.compensate(order.id, 'inventory')
                return
            if not self.process_payment(order.payment_info):
                # Inventory is already reserved, so compensation must release it
                self.compensate(order.id, 'payment')
                return
            self.dispatch_order(order.id)
        except Exception as ex:
            self.handle_failure(order.id, ex)

    def reserve_inventory(self, order_id):
        # Logic to reserve inventory
        return True

    def process_payment(self, payment_info):
        # Logic to process payment
        return True

    def dispatch_order(self, order_id):
        # Logic to dispatch order
        pass

    def compensate(self, order_id, step):
        # Execute compensating transactions for the steps completed before `step`
        print(f'Compensating for failure at {step} for order {order_id}')

    def handle_failure(self, order_id, error):
        # Log and manage unexpected failures
        print(f'Order {order_id} failed: {error}')

Key Insights

- Independent microservices facilitate targeted security improvements, a critical factor in sensitive sectors like finance.

- The Saga Pattern provides a robust framework for managing multi-step transactions in e-commerce, ensuring that compensatory actions maintain data integrity in the wake of failures.

- Centralized configuration coupled with individual service logging enhances both maintenance efficiency and system reliability.